1. Motivation & Data

This project started as an attempt to understand how much we can rely on pre-trained models, and whether semi-supervised learning can meaningfully reduce the need for labeled data without destroying performance.

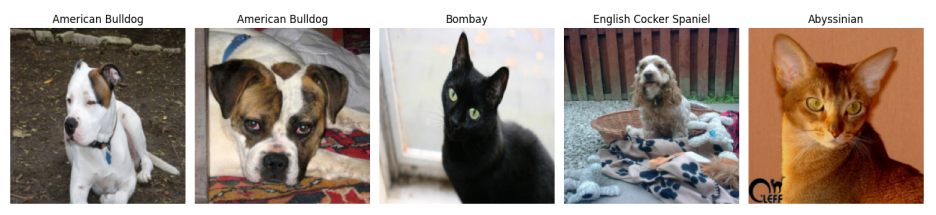

My team and I worked with the Oxford-IIIT Pet Dataset, which contains 37 different cat and dog breeds. While it may sound simple at first, the dataset is surprisingly challenging — many breeds look extremely similar, while images within the same class can vary significantly.

Sample images from the Oxford-IIIT Pet Dataset illustrating fine-grained differences.

3. Transfer Learning with ResNet50

All experiments were based on a ResNet50 convolutional neural network pre-trained on ImageNet. We began with a simple binary classification task (cat vs. dog), using feature extraction by freezing all convolutional layers and training only the final classification head.

From there, we extended the model to predict all 37 pet breeds and explored different fine-tuning strategies, including training multiple layers simultaneously and gradual un-freezing, where layers are incrementally unfrozen during training to reduce overfitting.

4. Semi-Supervised Learning: FixMatch

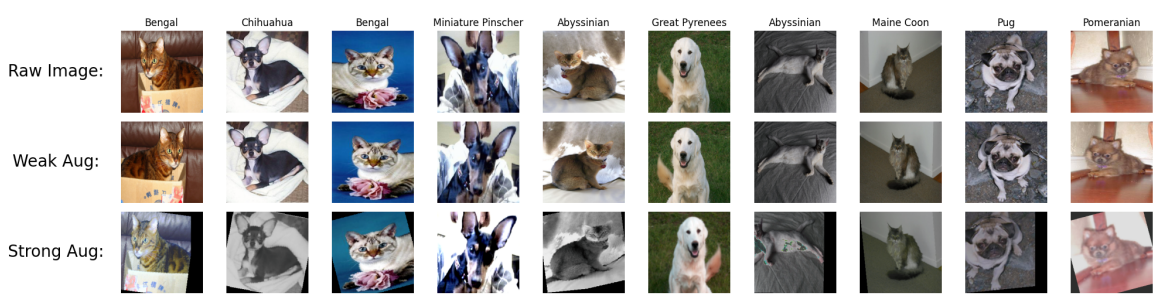

To push the project further, we implemented the FixMatch semi-supervised learning algorithm, introduced by Sogn et. la (2020). FixMatch combines pseudo-labeling with consistency regularization, using weak and strong data augmentations to learn effectively from unlabeled data.

The idea is simple but powerful: if the model is confident about a prediction on a weakly augmented image, it should make the same prediction for a strongly augmented version of that image.

Weak vs. strong augmentations used by the FixMatch algorithm.

5. Results

Overall, the experiments demonstrate that transfer learning with a pre-trained ResNet50 model is highly effective for both binary and fine-grained image classification on the Oxford-IIIT Pet Dataset.

-

Binary classification (Cat vs. Dog):

Feature extraction alone was sufficient to reach very high performance, achieving 99.29% test accuracy. This confirms that pre-trained ImageNet features transfer extremely well to simple classification tasks. -

Breed classification (37 classes):

Training only the final layer reached ~89% test accuracy, while fine-tuning deeper layers significantly improved performance. The best results were obtained using gradual un-freezing, which reduced overfitting compared to simultaneous fine-tuning. The final model achieved a test accuracy of 92.37%. -

Fine-tuning strategies:

Gradual un-freezing consistently outperformed simultaneous fine-tuning, with optimal performance when unfreezing up to 3-4 layers. Fine-tuning too many layers at once led to overfitting, it was indicated by declining validation accuracy. -

Imbalanced classes:

Class imbalance reduced test accuracy to 86.15% when left unaddressed. Applying weighted loss functions or weighted sampling improved results to ~89.7%, although neither method fully compensated for the imbalance. -

Semi-supervised learning (FixMatch):

FixMatch substantially improved performance in low-label regimes. With only 2 labeled images per class, accuracy increased from ~12% (supervised only) to 72.9%. Even with just 1 labeled image per class, FixMatch achieved ~50% accuracy, while the supervised baseline failed.Images per Class Labeled Data (%) Supervised Only FixMatch 50 60% 89.48% 90.35% 20 25% 88.42% 87.64% 10 12.5% 70.24% 86.41% 2 2.5% 11.99% 72.91% 1 1% 5.56% 50.15% Test accuracy comparison between supervised learning and FixMatch under varying amounts of labeled data.

These results highlight the importance of both transfer learning and training strategy: while pre-trained models provide a strong foundation, careful fine-tuning and semi-supervised techniques are critical when labeled data is scarce.

On a personal level, this project helped me better understand how neural networks behave during training. It gave me hands on expirience, which I plan to use in my future projects.